Mysql Logical Backup using mydumper – Export

In this article, we are going to demonstrate Mysql Logical Backup using mydumper – Export.

There are several ways to take the backup of MySQL data.

Those are: –

1) Physical Backup

2) Logical Backup

Physical Backup :

It copies the directories and its files that have database contents. Physical backup is also called Raw Backup.

Logical Backup:

It converts all the available rows into single or multiple inserts and also contains create statements to create databases, tables, triggers, and so on. Logical backup also called Text Backup.

List of utilities available for logical backup: –

A) Mysqldump Click here to read more

B) Mysqlpump Click here to read more

C) Mydumper

D) Mysqlshell Click here to read more

mydumper :

Mydumper/Myloader is not available by default. We need to download it from Github and install it. Generally, it will be useful for very big tables not for the smaller ones.

The utilities like mysqldump/mysqlpump use a single thread at the time of dumpfile import. But mydumper has an additional toolset called myloader and it comes along with mydumper package. Myloader uses multiple threads to speed up the data restoration/recovery process.

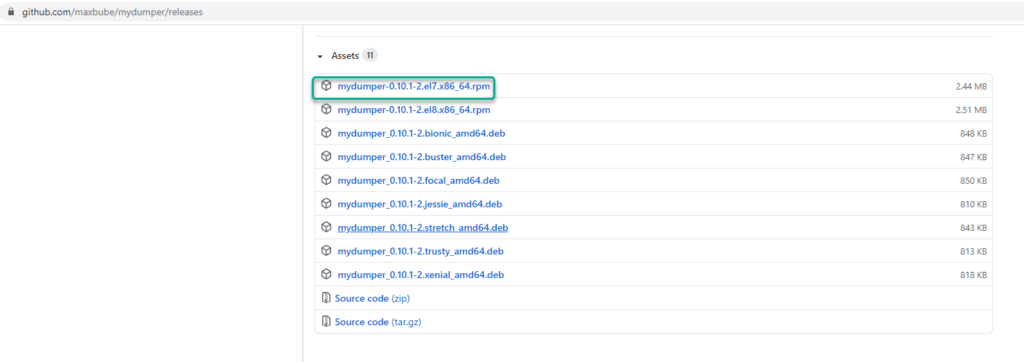

Click here to download mydumper from Github, Since I am using OEL 7.10 I will download version 7. Please refer to the below image. Once rpm is downloaded transfer to the mysql server and install using rpm command.

[root@test-machine01 tmp]# rpm -ivh mydumper-0.9.5-2.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:mydumper-0.9.5-2 ################################# [100%]

[root@test-machine01 tmp]#

After mydumper is installed we have to create a new user account to access mydumper utility as if we try to access it with the root account will receive the below error. I will use an admin account

[root@test-machine01 tmp]# mydumper

** (mydumper:83956): CRITICAL **: 12:52:47.274: Error connecting to database: Authentication plugin 'caching_sha2_password' cannot be loaded: /usr/lib64/mysql/plugin/caching_sha2_password.so: cannot open shared object file: No such file or directory

mysql> create user 'admin'@'localhost' identified by 'Root@1234';

Query OK, 0 rows affected (1.05 sec)

mysql> grant all privileges on *.* to 'admin'@'localhost' ;

Query OK, 0 rows affected (0.25 sec)

mysql>

mysql> alter user 'admin'@'localhost' identified with mysql_native_password BY 'Root@1234';

Query OK, 0 rows affected (0.11 sec)

Full DB backup : Use below command to take full backup. Please note you need to specify directory for each backup. You can get full options list from –help command.

[root@test-machine01 ~]# mydumper --help

[root@test-machine01 ~]# myloader --help

[root@test-machine01 fulldb]# mydumper -h localhost -u admin -p Root@1234 -t 2 --rows=50000 -v 3 --outputdir /u01/backup3/fulldb --logfile /u01/backup3/fulldb/fulldb.log

[root@test-machine01 fulldb]# ls -ltr

total 170504

-rw-r--r--. 1 root root 133 Feb 13 15:59 employees-schema-create.sql

-rw-r--r--. 1 root root 129 Feb 13 15:59 mysql-schema-create.sql

-rw-r--r--. 1 root root 133 Feb 13 15:59 repl_test-schema-create.sql

-rw-r--r--. 1 root root 127 Feb 13 15:59 sys-schema-create.sql

-rw-r--r--. 1 root root 351 Feb 13 15:59 employees.departments.sql

Options Used :

-t, --threads Number of threads to use, default 4

-v, --verbose Verbosity of output, 0 = silent, 1 = errors, 2 = warnings, 3 = info, default 2

-r, --rows Try to split tables into chunks of this many rows. This option turns off --chunk-filesize

-F, --chunk-filesize Split tables into chunks of this output file size. This value is in MB

Single DB Backup : Use below command to take single db backup. You can specify either –rows or –chunk-filesize to control dumpfiles.

[root@test-machine01 singledb]# mydumper -h localhost -u admin -p Root@1234 -t 2 --rows=50000 --database employees -v 3 --outputdir /u01/backup3/singledb --logfile /u01/backup3/singledb/singledb.log

[root@test-machine01 singledb]# ls -ltr

total 168424

-rw-r--r--. 1 root root 133 Feb 14 12:02 employees-schema-create.sql

-rw-r--r--. 1 root root 351 Feb 14 12:02 employees.departments.sql

-rw-r--r--. 1 root root 862968 Feb 14 12:02 employees.dept_emp.00001.sql

-rw-r--r--. 1 root root 2613818 Feb 14 12:02 employees.dept_emp.00002.sql

-rw-r--r--. 1 root root 3791352 Feb 14 12:02 employees.dept_emp.00000.sql

[root@test-machine01 test]# mydumper -h localhost -u admin -p Root@1234 -t 3 --chunk-filesize 10 --database employees -v 3 --outputdir /u01/backup3/test --logfile /u01/backup3/test/singledb.log

[root@test-machine01 test]# ls -ltr

total 168340

-rw-r--r--. 1 root root 133 Feb 14 12:00 employees-schema-create.sql

-rw-r--r--. 1 root root 351 Feb 14 12:00 employees.departments.sql

-rw-r--r--. 1 root root 1168 Feb 14 12:00 employees.dept_manager.sql

-rw-r--r--. 1 root root 10999800 Feb 14 12:00 employees.employees.00001.sql

-rw-r--r--. 1 root root 10999908 Feb 14 12:00 employees.dept_emp.00001.sql

Compressed DB backup: It will create a single file for each table as no –rows or –chunk-filesize option specified. You can see the difference after using compression option in dumpfile size.

[root@test-machine01 compdb]# mydumper -h localhost -u admin -p Root@1234 -t 3 --compress --database employees -v 3 --outputdir /u01/backup3/compdb --logfile /u01/backup3/compdb/compdb.log

[root@test-machine01 compdb]# ls -ltr

total 35128

-rw-r--r--. 1 root root 136 Feb 14 12:09 employees-schema-create.sql.gz

-rw-r--r--. 1 root root 403 Feb 14 12:09 employees.dept_manager.sql.gz

-rw-r--r--. 1 root root 259 Feb 14 12:09 employees.departments.sql.gz

-rw-r--r--. 1 root root 2464150 Feb 14 12:09 employees.dept_emp.sql.gz

[root@test-machine01 backup3]# du -sh compdb

35M compdb

[root@test-machine01 backup3]# du -sh singledb

165M singledb

[root@test-machine01 backup3]#

Multiple DB Backup : Multile DB backup is not possible in mydumper need to take single db backup in each command.

[root@test-machine01 multidb]# mydumper -h localhost -u admin -p Root@1234 -t 3 --chunk-filesize 20 --database employees -v 3 --outputdir /u01/backup3/multidb --logfile /u01/backup3/multidb/multidb.log

[root@test-machine01 multidb]# mydumper -h localhost -u admin -p Root@1234 -t 3 --chunk-filesize 20 --database repl_test -v 3 --outputdir /u01/backup3/multidb --logfile /u01/backup3/multidb/multidb.log

Multiple Table Backup : Use below command to take table level backup

[root@test-machine01 tablebkp]# mydumper -h localhost -u admin -p Root@1234 -t 4 --database employees --tables-list employees,departments,salaries -v 3 --outputdir /u01/backup3/tablebkp --logfile /u01/backup3/tablebkp/tablebkp.log

[root@test-machine01 tablebkp]# ls -ltr

total 133248

-rw-r--r--. 1 root root 133 Feb 14 12:37 employees-schema-create.sql

-rw-r--r--. 1 root root 332 Feb 14 12:37 employees.departments-schema.sql

-rw-r--r--. 1 root root 415 Feb 14 12:37 employees.employees-schema.sql

-rw-r--r--. 1 root root 351 Feb 14 12:37 employees.departments.sql

-rw-r--r--. 1 root root 447 Feb 14 12:37 employees.salaries-schema.sql

-rw-r--r--. 1 root root 17722965 Feb 14 12:37 employees.employees.sql

-rw-r--r--. 1 root root 118693182 Feb 14 12:37 employees.salaries.sql

-rw-r--r--. 1 root root 1317 Feb 14 12:37 tablebkp.log

-rw-r--r--. 1 root root 133 Feb 14 12:37 metadata

[root@test-machine01 tablebkp]#

A regular expression for DB Backup: You can use a regular expression to include or exclude DB from backup. In the below example all system schemas are excluded from backup only user schema will be backed up.

Also, note we are using option –ask-password if you don’t want to mention password in the backup command.

[root@test-machine01 regtablebkp]# mydumper -h localhost -u admin --ask-password --regex='^(?!(mysql|information_schema|performance_schema|sys))' --events --triggers --routines --outputdir=/u01/backup3/regtablebkp --logfile /u01/backup3/regtablebkp/regtablebkp.log

Enter MySQL Password:

[root@test-machine01 regtablebkp]# ls -ltr

total 168348

-rw-r--r--. 1 root root 0 Feb 14 13:03 regtablebkp.log

-rw-r--r--. 1 root root 133 Feb 14 13:03 employees-schema-create.sql

-rw-r--r--. 1 root root 133 Feb 14 13:03 repl_test-schema-create.sql

-rw-r--r--. 1 root root 351 Feb 14 13:03 employees.departments.sql

-rw-r--r--. 1 root root 1168 Feb 14 13:03 employees.dept_manager.sql

-rw-r--r--. 1 root root 14160012 Feb 14 13:03 employees.dept_emp.sql

Only Schema no data : If you want to take only metadata backup.

[root@test-machine01 schemaonlydb]# mydumper -h localhost -u admin --ask-password -t 3 --database employees --no-data -v 3 --outputdir /u01/backup3/schemaonlydb --logfile /u01/backup3/schemaonlydb/schemaonlydb.log

Enter MySQL Password:

[root@test-machine01 schemaonlydb]#

[root@test-machine01 schemaonlydb]# ls -ltr

total 52

-rw-r--r--. 1 root root 133 Feb 14 13:10 employees-schema-create.sql

-rw-r--r--. 1 root root 332 Feb 14 13:10 employees.departments-schema.sql

-rw-r--r--. 1 root root 602 Feb 14 13:10 employees.dept_manager-schema.sql

Only data no schema: If you want to take only data backup without metadata.

[root@test-machine01 dataonly]# mydumper -h localhost -u admin --ask-password -t 3 --database employees --no-schemas -v 3 --outputdir /u01/backup3/dataonly --logfile /u01/backup3/dataonly/dataonly.log

Enter MySQL Password:

[root@test-machine01 dataonly]# ls -ltr

total 168272

-rw-r--r--. 1 root root 351 Feb 14 13:13 employees.departments.sql

-rw-r--r--. 1 root root 1168 Feb 14 13:13 employees.dept_manager.sql

-rw-r--r--. 1 root root 14160012 Feb 14 13:13 employees.dept_emp.sql

-rw-r--r--. 1 root root 17722965 Feb 14 13:13 employees.employees.sql

Part II : Mysql Logical Backup using myloader – Import

This document is only for learning purpose and always validate in the LAB environment first before applying in the LIVE environment.

Hope so you like this article!

Please share your valuable feedback/comments/subscribe and follow us below and don’t forget to click on the bell icon to get the latest update. Click here to know more about our pursuit.

![]()