Steps to Upgrade Grid Infra – Standalone (GI) and Oracle Database from 12.2 to 19.14

In two recent blogs, we covered applying the latest JAN-22 RU 33583921 to a 12.2 Grid Infrastructure (Standalone) home and Database home (OH), and applying the Database and Grid Infrastructure RU patch before Grid Infrastructure configuration.

Both of those steps are prerequisites for this upgrade. Before starting an upgrade, Oracle strongly recommends patching both the earlier-release and the new-release Oracle Database and GI software. In those blogs we applied JAN-22 RU 33583921 to the 12.2 GI and DB homes, installed the 19.3 GI and DB homes, and applied JAN-22 RU 33509923 to them, which brings the new homes to 19.14.

Below are the high-level steps we will follow.

Step 1. Validate 12.2 GI

Step 2. Run precheck

Step 3. Upgrade GI from 12.2 to 19.14

Step 4. Validate GI upgrade to 19.14

Step 5. Download autoupgrade

Step 6. Create autoupgrade configuration file

Step 7. Upgrade database from 12.2 to 19.14 using autoupgrade

Step 8. Validate DB upgrade to 19.14

The following setup is used throughout this demonstration:

| Component | Value |
|---|---|
| Grid Home 12.2 | /u01/app/12.2/grid |
| Oracle DB Home 12.2 | /u01/app/oracle/product/12.2/db_1 |
| Grid Home 19.14 | /u01/app/19.0/grid |
| Oracle DB Home 19.14 | /u01/app/oracle/product/19.0/db_1 |
| Non-CDB DB Name | NONCDB |
| CDB DB Name | ORCL |
| Autoupgrade Location | /u01/19c_dbupgrade |

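Step 1. Validate 12.2 GI: Confirm that the Oracle Restart stack is running and every resource is in its expected state. The commands below, run as the grid user from the 12.2 Grid home, show the Oracle High Availability Services (HAS) release and software versions and the status of each resource. Both databases are Open from the 12.2 home; ora.ons and ora.diskmon are OFFLINE, which is this server's normal baseline and stays the same after the upgrade.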

```
[grid@test-machine03 bin]$ pwd
/u01/app/12.2/grid/bin
[grid@test-machine03 bin]$ ./crsctl query has releaseversion
Oracle High Availability Services release version on the local node is [12.2.0.1.0]
[grid@test-machine03 bin]$ ./crsctl query has softwareversion
Oracle High Availability Services version on the local node is [12.2.0.1.0]
[grid@test-machine03 bin]$ ./crsctl check has
CRS-4638: Oracle High Availability Services is online
[grid@test-machine03 bin]$ ./crsctl stat res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.FRA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.LISTENER.lsnr
               ONLINE  ONLINE       test-machine03           STABLE
ora.asm
               ONLINE  ONLINE       test-machine03           Started,STABLE
ora.ons
               OFFLINE OFFLINE      test-machine03           STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.cssd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.diskmon
      1        OFFLINE OFFLINE                               STABLE
ora.evmd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.noncdb.db
      1        ONLINE  ONLINE       test-machine03           Open,HOME=/u01/app/o
                                                             racle/product/12.2/d
                                                             b_1,STABLE
ora.orcl.db
      1        ONLINE  ONLINE       test-machine03           Open,HOME=/u01/app/o
                                                             racle/product/12.2/d
                                                             b_1,STABLE
--------------------------------------------------------------------------------
```

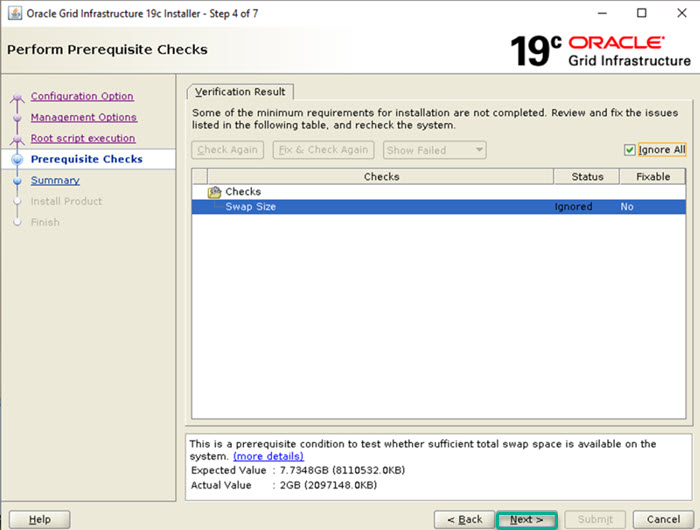
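The visual check of the crsctl stat res -t listing can also be scripted, which helps because the same check is repeated after the upgrade. Below is a minimal bash sketch, assuming the layout shown above (resource name on its own line, then TARGET and STATE, with an instance number in front for cluster resources). The function name check_crs_resources is our own and not part of any Oracle tool.

```bash
#!/usr/bin/env bash
# check_crs_resources (hypothetical helper): reads `crsctl stat res -t`
# output on stdin and prints "<resource> <target> <state>" for every
# resource whose TARGET is ONLINE but whose STATE is not ONLINE.
# Exit status: 0 if everything is as expected, 1 otherwise.
check_crs_resources() {
  awk '
    /^ora\./ { res = $1; next }                       # resource name line
    {
      if ($1 ~ /^[0-9]+$/) {                          # cluster resource: "1 TARGET STATE ..."
        target = $2; state = $3
      } else if ($1 == "ONLINE" || $1 == "OFFLINE") { # local resource: "TARGET STATE ..."
        target = $1; state = $2
      } else {
        next                                          # headers, separators, wrapped details
      }
      if (res != "" && target == "ONLINE" && state != "ONLINE") {
        print res, target, state
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

For example, crsctl stat res -t | check_crs_resources should print nothing and return 0 for the listing above. Resources with an OFFLINE target, such as ora.ons here or the databases after srvctl stop home, are deliberately not reported.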

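Step 2. Run precheck: From the new 19c Grid home, run runcluvfy.sh stage -pre hacfg and make sure all checks are PASSED. On this test server the Swap Size check fails (PRVF-7573: about 7.7 GB required, 2 GB found), and we are going to ignore it; on a production server, resolve every failure before continuing.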

```
[grid@test-machine03 ~]$ cd /u01/app/19.0/grid/
[grid@test-machine03 grid]$ pwd
/u01/app/19.0/grid
[grid@test-machine03 grid]$ ./runcluvfy.sh stage -pre hacfg

Verifying Physical Memory ...PASSED
Verifying Available Physical Memory ...PASSED
Verifying Swap Size ...FAILED (PRVF-7573)
Verifying Free Space: test-machine03:/usr,test-machine03:/var,test-machine03:/etc,test-machine03:/sbin,test-machine03:/tmp ...PASSED
Verifying User Existence: grid ...
  Verifying Users With Same UID: 1100 ...PASSED
Verifying User Existence: grid ...PASSED
Verifying Group Existence: dba ...PASSED
Verifying Group Membership: dba ...PASSED
Verifying Run Level ...PASSED
Verifying Architecture ...PASSED
Verifying OS Kernel Version ...PASSED
Verifying OS Kernel Parameter: semmsl ...PASSED
Verifying OS Kernel Parameter: semmns ...PASSED
Verifying OS Kernel Parameter: semopm ...PASSED
Verifying OS Kernel Parameter: semmni ...PASSED
Verifying OS Kernel Parameter: shmmax ...PASSED
Verifying OS Kernel Parameter: shmmni ...PASSED
Verifying OS Kernel Parameter: shmall ...PASSED
Verifying OS Kernel Parameter: file-max ...PASSED
Verifying OS Kernel Parameter: ip_local_port_range ...PASSED
Verifying OS Kernel Parameter: rmem_default ...PASSED
Verifying OS Kernel Parameter: rmem_max ...PASSED
Verifying OS Kernel Parameter: wmem_default ...PASSED
Verifying OS Kernel Parameter: wmem_max ...PASSED
Verifying OS Kernel Parameter: aio-max-nr ...PASSED
Verifying OS Kernel Parameter: panic_on_oops ...PASSED
Verifying Package: kmod-20-21 (x86_64) ...PASSED
Verifying Package: kmod-libs-20-21 (x86_64) ...PASSED
Verifying Package: binutils-2.23.52.0.1 ...PASSED
Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED
Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED
Verifying Package: sysstat-10.1.5 ...PASSED
Verifying Package: ksh ...PASSED
Verifying Package: make-3.82 ...PASSED
Verifying Package: glibc-2.17 (x86_64) ...PASSED
Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED
Verifying Package: libaio-0.3.109 (x86_64) ...PASSED
Verifying Package: nfs-utils-1.2.3-15 ...PASSED
Verifying Package: smartmontools-6.2-4 ...PASSED
Verifying Package: net-tools-2.0-0.17 ...PASSED
Verifying Package: policycoreutils-2.5-17 ...PASSED
Verifying Package: policycoreutils-python-2.5-17 ...PASSED
Verifying Users With Same UID: 0 ...PASSED
Verifying Current Group ID ...PASSED
Verifying Root user consistency ...PASSED

Pre-check for Oracle Restart configuration was unsuccessful.

Failures were encountered during execution of CVU verification request "stage -pre hacfg".

Verifying Swap Size ...FAILED
test-machine03: PRVF-7573 : Sufficient swap size is not available on node
                "test-machine03" [Required = 7.7348GB (8110532.0KB) ; Found =
                2GB (2097148.0KB)]

CVU operation performed: stage -pre hacfg
Date: Feb 15, 2022 12:09:32 PM
CVU home: /u01/app/19.0/grid/
User: grid
```
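When a failure is knowingly accepted, as the swap check is here, it helps to filter it out so that any new failure still stands out. A minimal bash sketch, assuming cluvfy prints failing checks as "...FAILED (<code>)" as above; cluvfy_unexpected_failures is a hypothetical helper name, and the accepted codes are passed as arguments.

```bash
#!/usr/bin/env bash
# cluvfy_unexpected_failures (hypothetical helper): reads runcluvfy.sh output
# on stdin and prints every "...FAILED (<code>)" line whose code is not in
# the allow-list given as arguments (e.g. PRVF-7573 for the swap check).
# Exit status: 0 if no unexpected failure was found, 1 otherwise.
cluvfy_unexpected_failures() {
  awk -v allowed="$*" '
    BEGIN {
      n = split(allowed, list, " ")
      for (i = 1; i <= n; i++) ok[list[i]] = 1
    }
    /\.\.\.FAILED [(]/ {
      code = $0
      sub(/.*\.\.\.FAILED [(]/, "", code)   # keep the text after "...FAILED ("
      sub(/[)].*/, "", code)                # drop ")" and anything after it
      if (!(code in ok)) { print; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

With the run above, ./runcluvfy.sh stage -pre hacfg | cluvfy_unexpected_failures PRVF-7573 should print nothing. The summary repeat of a failure ("...FAILED" without a code) is ignored, so each failure is reported once.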

[grid@test-machine03 grid]$

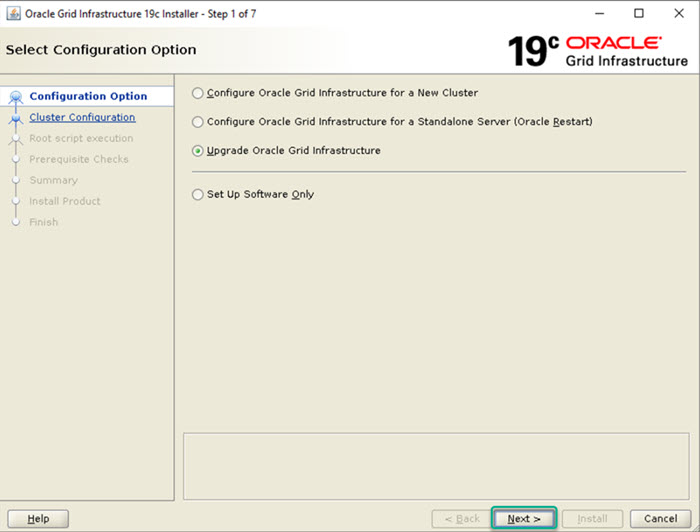

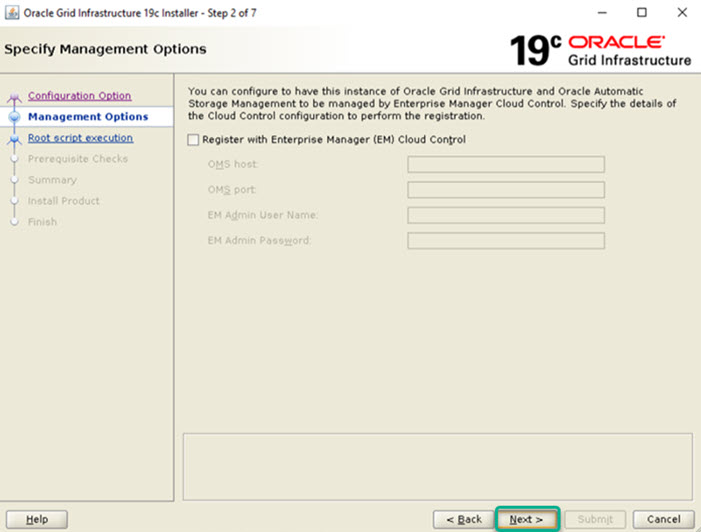

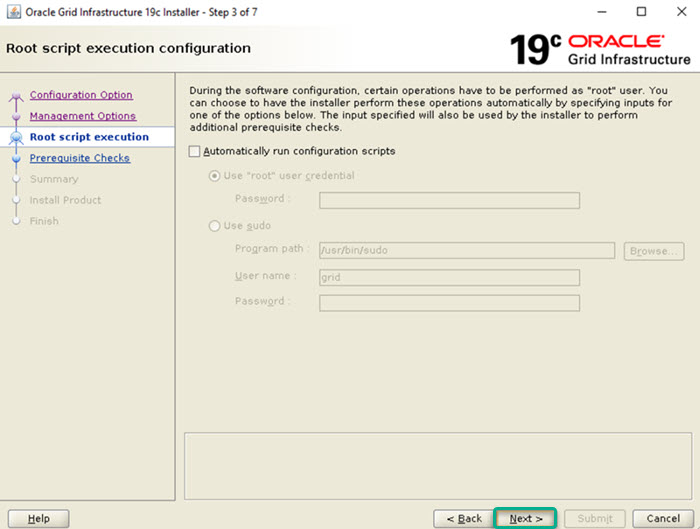

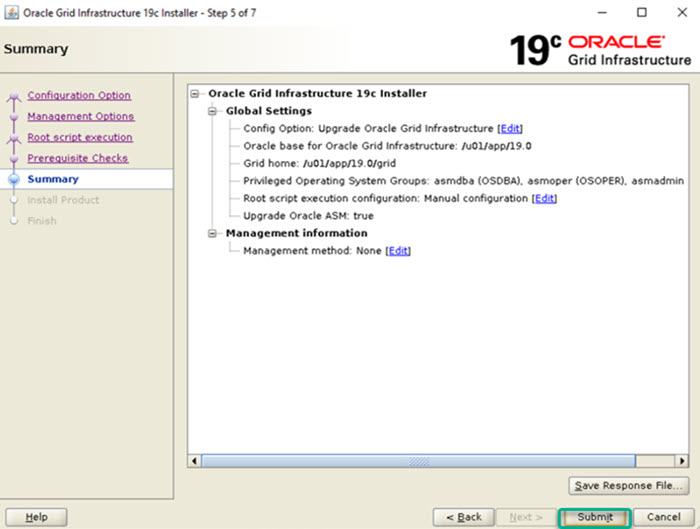

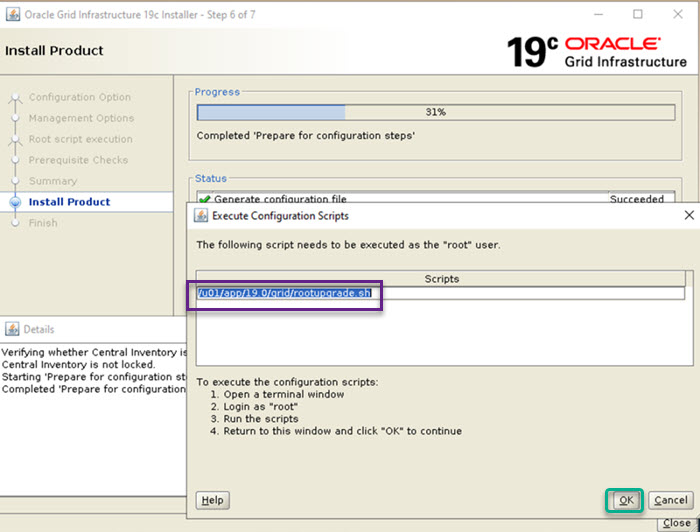

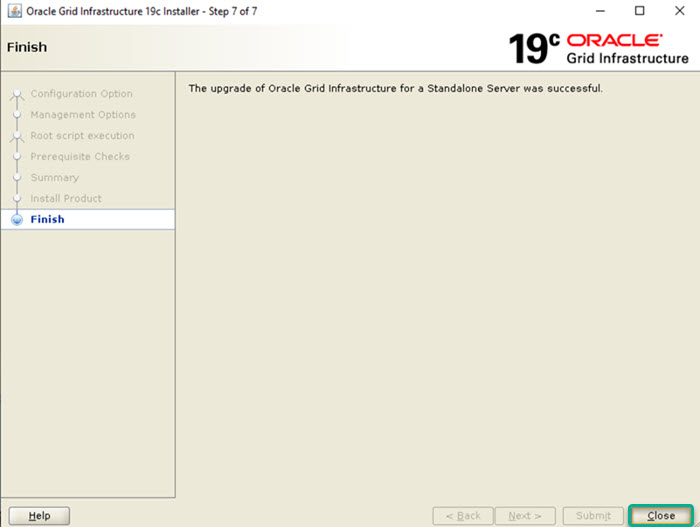

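Step 3. Upgrade GI from 12.2 to 19.14: Once the prechecks are done, stop all databases running from the 12.2 database home with the single srvctl stop home command below, run as the oracle user. The -statefile option records which databases were running, so the same file can be used to restart them after the upgrade. Then, as the grid user, enable an X display on the server, invoke the GI installer with gridSetup.sh from the 19c Grid home, and follow the installer screens as shown in the screenshots. When the installer prompts for it, run rootupgrade.sh as root.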

```
[root@test-machine03 bin]# su - oracle
Last login: Mon Feb 14 17:27:35 +03 2022
[oracle@test-machine03 ~]$ . oraenv
ORACLE_SID = [oracle] ? orcl
The Oracle base has been set to /u01/app/oracle
[oracle@test-machine03 ~]$ srvctl stop home -oraclehome $ORACLE_HOME -statefile ~/srv1_dbstate1.dmp
[oracle@test-machine03 ~]$ ps -ef|grep pmon
grid      2978     1  0 14:44 ?        00:00:00 asm_pmon_+ASM
oracle    4329  4176  0 14:53 pts/0    00:00:00 grep --color=auto pmon
```
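Before launching the installer, the ps -ef|grep pmon check above confirms that only the ASM instance is left. A minimal bash sketch that makes this check explicit, assuming database instances appear with the usual ora_pmon_<SID> background process name; remaining_db_instances is a hypothetical helper.

```bash
#!/usr/bin/env bash
# remaining_db_instances (hypothetical helper): reads `ps -ef` output on
# stdin and prints the SID of each database instance still running,
# based on its ora_pmon_<SID> process. The ASM instance (asm_pmon_+ASM)
# and the grep command itself are ignored.
remaining_db_instances() {
  awk '$NF ~ /^ora_pmon_/ { sid = $NF; sub(/^ora_pmon_/, "", sid); print sid }'
}
```

At this point, ps -ef | remaining_db_instances should print nothing.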

```
[root@test-machine03 ~]# su - grid
Last login: Tue Feb 15 14:40:12 +03 2022
[grid@test-machine03 ~]$ export DISPLAY=localhost:10.0
[grid@test-machine03 ~]$ cd /u01/app/19.0/grid/
[grid@test-machine03 grid]$ xclock
Warning: Missing charsets in String to FontSet conversion
[grid@test-machine03 grid]$ pwd
/u01/app/19.0/grid
[grid@test-machine03 grid]$ ./gridSetup.sh
Launching Oracle Grid Infrastructure Setup Wizard...

The response file for this session can be found at:
 /u01/app/19.0/grid/install/response/grid_2022-02-15_03-16-24PM.rsp

You can find the log of this install session at:
 /u01/app/oraInventory/logs/UpdateNodeList2022-02-15_03-16-24PM.log
```

```
[root@test-machine03 bin]# /u01/app/19.0/grid/rootupgrade.sh
Performing root user operation.

The following environment variables are set as:
    ORACLE_OWNER= grid
    ORACLE_HOME=  /u01/app/19.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The file "oraenv" already exists in /usr/local/bin.  Overwrite it? (y/n)
[n]:
The file "coraenv" already exists in /usr/local/bin.  Overwrite it? (y/n)
[n]:

Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /u01/app/19.0/grid/crs/install/crsconfig_params
The log of current session can be found at:
  /u01/app/19.0/crsdata/test-machine03/crsconfig/roothas_2022-02-15_03-23-41PM.log
2022/02/15 15:23:44 CLSRSC-595: Executing upgrade step 1 of 12: 'UpgPrechecks'.
2022/02/15 15:23:48 CLSRSC-595: Executing upgrade step 2 of 12: 'GetOldConfig'.
2022/02/15 15:23:50 CLSRSC-595: Executing upgrade step 3 of 12: 'GenSiteGUIDs'.
2022/02/15 15:23:50 CLSRSC-595: Executing upgrade step 4 of 12: 'SetupOSD'.
Redirecting to /bin/systemctl restart rsyslog.service
2022/02/15 15:23:51 CLSRSC-595: Executing upgrade step 5 of 12: 'PreUpgrade'.
ASM has been upgraded and started successfully.
2022/02/15 15:24:26 CLSRSC-595: Executing upgrade step 6 of 12: 'UpgradeAFD'.
2022/02/15 15:24:26 CLSRSC-595: Executing upgrade step 7 of 12: 'UpgradeOLR'.
clscfg: EXISTING configuration version 0 detected.
Creating OCR keys for user 'grid', privgrp 'oinstall'..
Operation successful.
2022/02/15 15:24:30 CLSRSC-595: Executing upgrade step 8 of 12: 'UpgradeOCR'.
LOCAL ONLY MODE
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
CRS-4664: Node test-machine03 successfully pinned.
2022/02/15 15:24:33 CLSRSC-595: Executing upgrade step 9 of 12: 'CreateOHASD'.
2022/02/15 15:24:34 CLSRSC-595: Executing upgrade step 10 of 12: 'ConfigOHASD'.
2022/02/15 15:24:34 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'
2022/02/15 15:24:57 CLSRSC-595: Executing upgrade step 11 of 12: 'UpgradeSIHA'.

test-machine03     2022/02/15 15:26:18     /u01/app/19.0/crsdata/test-machine03/olr/backup_20220215_152618.olr     3342960164
test-machine03     2022/02/09 17:58:39     /u01/app/12.2/grid/cdata/test-machine03/backup_20220209_175839.olr     0
2022/02/15 15:26:18 CLSRSC-595: Executing upgrade step 12 of 12: 'InstallACFS'.
2022/02/15 15:27:11 CLSRSC-327: Successfully configured Oracle Restart for a standalone server
```
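rootupgrade.sh reports progress as numbered CLSRSC-595 steps and ends with CLSRSC-327 on success. A minimal bash sketch that summarizes a saved copy of that console output, assuming the message format shown above; rootupgrade_status is a hypothetical helper, and we have not verified that the roothas log records the messages in exactly the same form.

```bash
#!/usr/bin/env bash
# rootupgrade_status (hypothetical helper): reads rootupgrade.sh console
# output on stdin, prints the last upgrade step that was started, and
# returns 0 only if the CLSRSC-327 success message is present.
rootupgrade_status() {
  awk '
    /CLSRSC-595: Executing upgrade step/ {
      step = $0
      sub(/.*upgrade step /, "", step)   # keep "N of M: <step name>."
      last = step
    }
    /CLSRSC-327:/ { done = 1 }
    END {
      print "last step: " last
      exit (done ? 0 : 1)
    }
  '
}
```

For the run above it would print "last step: 12 of 12: 'InstallACFS'." and return 0; a non-zero status means the script stopped before reporting success, and the printed step shows where.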

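Step 4. Validate GI upgrade to 19.14: After the GI upgrade finishes, confirm that the listener now runs from the 19c Grid home, that all resources are in their expected state, and that HAS reports version 19.0.0.0.0. The databases are still OFFLINE because they were stopped before the upgrade. Check the patch level of the new Grid home with opatch lspatches (it should list Database Release Update 19.14.0.0.220118), then restart the databases from the 12.2 home with srvctl start home and the state file saved in Step 3, and confirm they are Open again.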

```
[root@test-machine03 bin]# ps -ef|grep tns
root        23     2  0 14:38 ?        00:00:00 [netns]
grid     23679     1  0 15:28 ?        00:00:00 /u01/app/19.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit
root     24188  2573  0 15:29 pts/0    00:00:00 grep --color=auto tns
[root@test-machine03 app]# cd 19.0/grid/bin/
[root@test-machine03 bin]# pwd
/u01/app/19.0/grid/bin
[root@test-machine03 bin]# ./crsctl stat res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.FRA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.LISTENER.lsnr
               ONLINE  ONLINE       test-machine03           STABLE
ora.asm
               ONLINE  ONLINE       test-machine03           Started,STABLE
ora.ons
               OFFLINE OFFLINE      test-machine03           STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.cssd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.diskmon
      1        OFFLINE OFFLINE                               STABLE
ora.evmd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.noncdb.db
      1        OFFLINE OFFLINE                               Instance Shutdown,ST
                                                             ABLE
ora.orcl.db
      1        OFFLINE OFFLINE                               Instance Shutdown,ST
                                                             ABLE
--------------------------------------------------------------------------------
[root@test-machine03 bin]# ./crsctl query has releaseversion
Oracle High Availability Services release version on the local node is [19.0.0.0.0]
[root@test-machine03 bin]# ./crsctl query has softwareversion
Oracle High Availability Services version on the local node is [19.0.0.0.0]
[root@test-machine03 bin]# su - grid
Last login: Tue Feb 15 15:27:11 +03 2022 on pts/0
[grid@test-machine03 ~]$ export ORACLE_HOME=/u01/app/19.0/grid
[grid@test-machine03 ~]$ export PATH=$PATH:$ORACLE_HOME/bin:$ORACLE_HOME/OPatch
[grid@test-machine03 ~]$ opatch lspatches
33575402;DBWLM RELEASE UPDATE 19.0.0.0.0 (33575402)
33534448;ACFS RELEASE UPDATE 19.14.0.0.0 (33534448)
33529556;OCW RELEASE UPDATE 19.14.0.0.0 (33529556)
33515361;Database Release Update : 19.14.0.0.220118 (33515361)
33239955;TOMCAT RELEASE UPDATE 19.0.0.0.0 (33239955)

OPatch succeeded.
```
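The two version checks in this step can be reduced to plain values that a script can compare. A minimal bash sketch, assuming the message formats printed by crsctl query has and opatch lspatches above; has_version and db_ru_version are hypothetical helper names.

```bash
#!/usr/bin/env bash
# has_version (hypothetical helper): reads the output of
# `crsctl query has releaseversion` or `crsctl query has softwareversion`
# and prints the version between the square brackets, e.g. 19.0.0.0.0.
has_version() {
  sed -n 's/.*version on the local node is \[\(.*\)].*/\1/p'
}

# db_ru_version (hypothetical helper): reads `opatch lspatches` output and
# prints the Database Release Update version, e.g. 19.14.0.0.220118.
db_ru_version() {
  sed -n 's/.*Database Release Update : \([0-9][0-9.]*[0-9]\).*/\1/p'
}
```

For example, [ "$(crsctl query has softwareversion | has_version)" = 19.0.0.0.0 ] succeeds on the upgraded server, and opatch lspatches | db_ru_version should print 19.14.0.0.220118 for the Grid home above.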

```
[root@test-machine03 bin]# su - oracle
Last login: Tue Feb 15 15:38:19 +03 2022 on pts/0
[oracle@test-machine03 ~]$ . oraenv
ORACLE_SID = [oracle] ? orcl
The Oracle base has been set to /u01/app/oracle
[oracle@test-machine03 ~]$ srvctl start home -oraclehome $ORACLE_HOME -statefile ~/srv1_dbstate1.dmp
```

```
[root@test-machine03 bin]# ./crsctl stat res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.FRA.dg
               ONLINE  ONLINE       test-machine03           STABLE
ora.LISTENER.lsnr
               ONLINE  ONLINE       test-machine03           STABLE
ora.asm
               ONLINE  ONLINE       test-machine03           Started,STABLE
ora.ons
               OFFLINE OFFLINE      test-machine03           STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.cssd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.diskmon
      1        OFFLINE OFFLINE                               STABLE
ora.evmd
      1        ONLINE  ONLINE       test-machine03           STABLE
ora.noncdb.db
      1        ONLINE  ONLINE       test-machine03           Open,HOME=/u01/app/o
                                                             racle/product/12.2/d
                                                             b_1,STABLE
ora.orcl.db
      1        ONLINE  ONLINE       test-machine03           Open,HOME=/u01/app/o
                                                             racle/product/12.2/d
                                                             b_1,STABLE
--------------------------------------------------------------------------------
```

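Step 5. Download autoupgrade: Download the latest AutoUpgrade tool from My Oracle Support, AutoUpgrade Tool (Doc ID 2485457.1), copy autoupgrade.jar to /u01/19c_dbupgrade, and make it owned by the oracle user. Then check the build with -version; the build used here (21.3.211115) lists 19 among its supported target versions.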

```
[root@test-machine03 ~]# cd /u01/19c_dbupgrade
[root@test-machine03 19c_dbupgrade]# ls -ltr
total 2932
-rw-r--r--. 1 root root 2999632 Feb 15 18:05 autoupgrade.jar
[root@test-machine03 19c_dbupgrade]# chown oracle:oinstall autoupgrade.jar
[root@test-machine03 19c_dbupgrade]# su - oracle
Last login: Tue Feb 15 16:37:47 +03 2022 on pts/2
[oracle@test-machine03 ~]$ cd /u01/19c_dbupgrade
[oracle@test-machine03 19c_dbupgrade]$ ls -l
total 2932
-rw-r--r--. 1 oracle oinstall 2999632 Feb 15 18:05 autoupgrade.jar
[oracle@test-machine03 19c_dbupgrade]$ java -jar autoupgrade.jar -version
build.hash 081e3f7
build.version 21.3.211115
build.date 2021/11/15 11:57:54
build.max_target_version 21
build.supported_target_versions 12.2,18,19,21
build.type production
```
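Before writing the configuration, it is worth confirming that the downloaded build can upgrade to the intended release. A minimal bash sketch, assuming the build.supported_target_versions line format shown above; autoupgrade_supports is a hypothetical helper.

```bash
#!/usr/bin/env bash
# autoupgrade_supports (hypothetical helper): reads the output of
# `java -jar autoupgrade.jar -version` on stdin and returns 0 if the
# target release given as $1 (e.g. 19) is listed in
# build.supported_target_versions, 1 otherwise.
autoupgrade_supports() {
  awk -v target="$1" '
    $1 == "build.supported_target_versions" {
      n = split($2, versions, ",")
      for (i = 1; i <= n; i++) if (versions[i] == target) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

For example: java -jar autoupgrade.jar -version | autoupgrade_supports 19 && echo "target 19 supported".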

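Step 6. Create autoupgrade configuration file: Generate a sample configuration file with the command below and use it as a template for your own file, edited for your environment. We use /u01/19c_dbupgrade/autoupgrade as the log directory. The resulting 19c_config.cfg defines two jobs, upg1 for NONCDB and upg2 for ORCL, each upgrading from the 12.2 home to the 19c home with run_utlrp (recompile invalid objects after the upgrade) and timezone_upg (upgrade the time zone file) enabled.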

```
[oracle@test-machine03 19c_dbupgrade]$ java -jar autoupgrade.jar -create_sample_file config
Created sample configuration file /u01/19c_dbupgrade/sample_config.cfg
[oracle@test-machine03 19c_dbupgrade]$ ls -ltr
total 2940
-rw-r--r--. 1 oracle oinstall 2999632 Feb 15 18:05 autoupgrade.jar
-rw-r--r--. 1 oracle oinstall    5878 Feb 16 11:25 sample_config.cfg
[oracle@test-machine03 19c_dbupgrade]$ mkdir -p /u01/19c_dbupgrade/autoupgrade
[oracle@test-machine03 19c_dbupgrade]$ vi 19c_config.cfg
```

Contents of 19c_config.cfg:

```
global.autoupg_log_dir=/u01/19c_dbupgrade/autoupgrade
upg1.log_dir=/u01/19c_dbupgrade/autoupgrade
upg1.sid=noncdb
upg1.source_home=/u01/app/oracle/product/12.2/db_1
upg1.target_home=/u01/app/oracle/product/19.0/db_1
upg1.start_time=NOW
upg1.upgrade_node=localhost
upg1.run_utlrp=yes
upg1.timezone_upg=yes
upg1.target_version=19
upg2.log_dir=/u01/19c_dbupgrade/autoupgrade
upg2.sid=orcl
upg2.source_home=/u01/app/oracle/product/12.2/db_1
upg2.target_home=/u01/app/oracle/product/19.0/db_1
upg2.start_time=NOW
upg2.upgrade_node=localhost
upg2.run_utlrp=yes
upg2.timezone_upg=yes
upg2.target_version=19
```

```
[oracle@test-machine03 19c_dbupgrade]$ pwd
/u01/19c_dbupgrade
[oracle@test-machine03 19c_dbupgrade]$ ls -l
total 2944
-rw-r--r--. 1 oracle oinstall     964 Feb 16 11:49 19c_config.cfg
drwxr-xr-x. 2 oracle oinstall       6 Feb 16 11:50 autoupgrade
-rw-r--r--. 1 oracle oinstall 2999632 Feb 15 18:05 autoupgrade.jar
-rw-r--r--. 1 oracle oinstall    5878 Feb 16 11:25 sample_config.cfg
```
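With more databases the upgN blocks become repetitive, so the file can be generated instead of typed. A minimal bash sketch that reproduces the parameters used in 19c_config.cfg for any list of SIDs; make_autoupgrade_cfg is a hypothetical helper, and it hard-codes target_version=19 and the other per-job settings from this post.

```bash
#!/usr/bin/env bash
# make_autoupgrade_cfg (hypothetical helper): prints an AutoUpgrade config
# with one upgN block per SID, using the same settings as 19c_config.cfg.
# Arguments: log_dir source_home target_home sid [sid ...]
make_autoupgrade_cfg() {
  local log_dir=$1 src=$2 tgt=$3 n=0 sid kv
  shift 3
  printf 'global.autoupg_log_dir=%s\n' "$log_dir"
  for sid in "$@"; do
    n=$((n + 1))
    for kv in "log_dir=$log_dir" "sid=$sid" "source_home=$src" \
              "target_home=$tgt" "start_time=NOW" "upgrade_node=localhost" \
              "run_utlrp=yes" "timezone_upg=yes" "target_version=19"; do
      printf 'upg%d.%s\n' "$n" "$kv"    # e.g. upg1.sid=noncdb
    done
  done
}
```

For this environment: make_autoupgrade_cfg /u01/19c_dbupgrade/autoupgrade /u01/app/oracle/product/12.2/db_1 /u01/app/oracle/product/19.0/db_1 noncdb orcl > 19c_config.cfg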

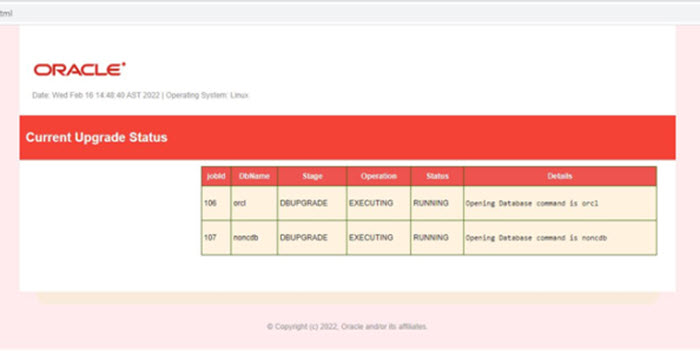

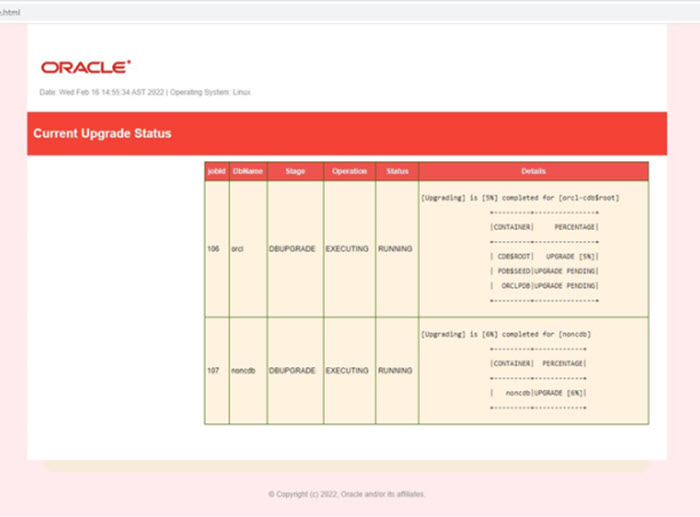

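Step 7. Upgrade database from 12.2 to 19.14 using autoupgrade: Run AutoUpgrade in three passes. First run it in analyze mode, which checks both databases without changing them, and review status.html for any error that could make the upgrade fail. Next run it in fixups mode to execute the pre-upgrade fixups, and review status.html again once it finishes. When both passes are done and status.html reports no ERROR, proceed with deploy mode: this is where the actual downtime starts, and AutoUpgrade creates a guaranteed restore point for each database and performs the upgrade.

Inside the upg> console, lsj lists all jobs and status -job <job#> shows the details of a single job. Instead of running lsj repeatedly, you can monitor the upgrade in a web browser: start an HTTP server in the directory that holds state.html (here /u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto) with “python -m SimpleHTTPServer 8080” (Python 2; on Python 3 use “python3 -m http.server 8080”) and open “http://<server-ip>:8080/state.html”.

AutoUpgrade warns that it is not fully tested on OpenJDK. To avoid this warning, you can run it with the Java shipped in the 19c Oracle home, /u01/app/oracle/product/19.0/db_1/jdk/bin/java.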

Analyze Mode

```
[oracle@test-machine03 19c_dbupgrade]$ java -jar autoupgrade.jar -config 19c_config.cfg -mode analyze
AutoUpgrade is not fully tested on OpenJDK 64-Bit Server VM, Oracle recommends to use Java HotSpot(TM)
AutoUpgrade 21.3.211115 launched with default options
Processing config file ...
+--------------------------------+
| Starting AutoUpgrade execution |
+--------------------------------+
2 databases will be analyzed
Type 'help' to list console commands
upg> LSJ
Unrecognized cmd: LSJ
upg> lsj
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
|Job#|DB_NAME|    STAGE|OPERATION| STATUS|    START_TIME| UPDATED|                     MESSAGE|
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
| 102|   orcl|PRECHECKS|PREPARING|RUNNING|22/02/16 14:12|14:12:45|Loading database information|
| 103| noncdb|PRECHECKS|PREPARING|RUNNING|22/02/16 14:12|14:13:38|            Remaining 51/101|
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
Total jobs 2

upg> Job 103 completed
Job 102 completed
------------------- Final Summary --------------------
Number of databases [ 2 ]

Jobs finished [2]
Jobs failed [0]
Jobs pending [0]

Please check the summary report at:
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.html
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.log
```
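Each run ends with the same Final Summary block, so a wrapper script only needs the Jobs failed count to decide whether to move on to the next mode. A minimal bash sketch, assuming the summary format shown above and that the console output was captured to a file, for example from a run with AutoUpgrade's -noconsole option (an assumption about how you run it; this post used the interactive console). jobs_failed is a hypothetical helper.

```bash
#!/usr/bin/env bash
# jobs_failed (hypothetical helper): reads AutoUpgrade console output on
# stdin and prints the number from the "Jobs failed [N]" summary line.
# Returns 1 if no such line is found.
jobs_failed() {
  awk '
    /^[ \t]*Jobs failed[ \t]*[[]/ {
      n = $0
      gsub(/[^0-9]/, "", n)    # keep only the digits, e.g. 0
      print n
      found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

For example, [ "$(jobs_failed < analyze.out)" = 0 ] before continuing with fixups and deploy, where analyze.out is a hypothetical file holding the captured output.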

Fixup Mode

```
[oracle@test-machine03 19c_dbupgrade]$ java -jar autoupgrade.jar -config 19c_config.cfg -mode fixups
AutoUpgrade is not fully tested on OpenJDK 64-Bit Server VM, Oracle recommends to use Java HotSpot(TM)
AutoUpgrade 21.3.211115 launched with default options
Processing config file ...
+--------------------------------+
| Starting AutoUpgrade execution |
+--------------------------------+
2 databases will be processed
Type 'help' to list console commands
upg> lsj
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
|Job#|DB_NAME|    STAGE|OPERATION|  STATUS|    START_TIME| UPDATED|                     MESSAGE|
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
| 104|   orcl|PRECHECKS|PREPARING| RUNNING|22/02/16 14:24|14:24:26|Loading database information|
| 105| noncdb|    SETUP|PREPARING|FINISHED|22/02/16 14:24|14:24:24|                  Scheduling|
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
Total jobs 2

upg> status -job 104
Progress
-----------------------------------
Start time: 22/02/16 14:24
Elapsed (min): 0
End time: N/A
Last update: 2022-02-16T14:24:26.753
Stage: PRECHECKS
Operation: PREPARING
Status: RUNNING
Pending stages: 2
Stage summary:
    SETUP             <1 min
    PRECHECKS         <1 min (IN PROGRESS)

Job Logs Locations
-----------------------------------
Logs Base: /u01/19c_dbupgrade/autoupgrade/orcl
Job logs: /u01/19c_dbupgrade/autoupgrade/orcl/104
Stage logs: /u01/19c_dbupgrade/autoupgrade/orcl/104/prechecks
TimeZone: /u01/19c_dbupgrade/autoupgrade/orcl/temp

Additional information
-----------------------------------
Details:
Checks

Error Details:
None

upg> status -job 105
Progress
-----------------------------------
Start time: 22/02/16 14:24
Elapsed (min): 0
End time: N/A
Last update: 2022-02-16T14:24:46.931
Stage: PREFIXUPS
Operation: EXECUTING
Status: RUNNING
Pending stages: 1
Stage summary:
    SETUP             <1 min
    PRECHECKS         <1 min
    PREFIXUPS         <1 min (IN PROGRESS)

Job Logs Locations
-----------------------------------
Logs Base: /u01/19c_dbupgrade/autoupgrade/noncdb
Job logs: /u01/19c_dbupgrade/autoupgrade/noncdb/105
Stage logs: /u01/19c_dbupgrade/autoupgrade/noncdb/105/prefixups
TimeZone: /u01/19c_dbupgrade/autoupgrade/noncdb/temp

Additional information
-----------------------------------
Details:
+--------+---------------------+-------+
|DATABASE|                FIXUP| STATUS|
+--------+---------------------+-------+
|  noncdb|INVALID_OBJECTS_EXIST|STARTED|
+--------+---------------------+-------+

Error Details:
None

upg> lsj
+----+-------+---------+---------+-------+--------------+--------+-----------------+
|Job#|DB_NAME|    STAGE|OPERATION| STATUS|    START_TIME| UPDATED|          MESSAGE|
+----+-------+---------+---------+-------+--------------+--------+-----------------+
| 104|   orcl|PRECHECKS|PREPARING|RUNNING|22/02/16 14:24|14:24:54|Remaining 176/251|
| 105| noncdb|PREFIXUPS|EXECUTING|RUNNING|22/02/16 14:24|14:24:46|    Remaining 3/3|
+----+-------+---------+---------+-------+--------------+--------+-----------------+
Total jobs 2

upg> lsj
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
|Job#|DB_NAME|    STAGE|OPERATION| STATUS|    START_TIME| UPDATED|                     MESSAGE|
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
| 104|   orcl|PREFIXUPS|EXECUTING|RUNNING|22/02/16 14:24|14:28:02|               Remaining 5/6|
| 105| noncdb|PREFIXUPS|EXECUTING|RUNNING|22/02/16 14:24|14:27:24|Loading database information|
+----+-------+---------+---------+-------+--------------+--------+----------------------------+
Total jobs 2

upg> Job 105 completed
upg> lsj
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
|Job#|DB_NAME|    STAGE|OPERATION|  STATUS|    START_TIME| UPDATED|                     MESSAGE|
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
| 104|   orcl|PREFIXUPS|EXECUTING| RUNNING|22/02/16 14:24|14:30:04|Loading database information|
| 105| noncdb|PREFIXUPS|  STOPPED|FINISHED|22/02/16 14:24|14:28:50|Loading database information|
+----+-------+---------+---------+--------+--------------+--------+----------------------------+
Total jobs 2

upg> Job 104 completed
------------------- Final Summary --------------------
Number of databases [ 2 ]

Jobs finished [2]
Jobs failed [0]
Jobs pending [0]

Please check the summary report at:
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.html
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.log
```

Deploy Mode

```
[oracle@test-machine03 19c_dbupgrade]$ java -jar autoupgrade.jar -config 19c_config.cfg -mode deploy
AutoUpgrade is not fully tested on OpenJDK 64-Bit Server VM, Oracle recommends to use Java HotSpot(TM)
AutoUpgrade 21.3.211115 launched with default options
Processing config file ...
+--------------------------------+
| Starting AutoUpgrade execution |
+--------------------------------+
2 databases will be processed
Type 'help' to list console commands
upg> lsj
+----+-------+---------+---------+--------+--------------+--------+----------+
|Job#|DB_NAME|    STAGE|OPERATION|  STATUS|    START_TIME| UPDATED|   MESSAGE|
+----+-------+---------+---------+--------+--------------+--------+----------+
| 106|   orcl|PRECHECKS|PREPARING|FINISHED|22/02/16 14:41|14:41:24|          |
| 107| noncdb|    SETUP|PREPARING|FINISHED|22/02/16 14:42|14:41:20|Scheduling|
+----+-------+---------+---------+--------+--------------+--------+----------+
Total jobs 2

upg> lsj
+----+-------+----------+---------+-------+--------------+--------+------------------+
|Job#|DB_NAME|     STAGE|OPERATION| STATUS|    START_TIME| UPDATED|           MESSAGE|
+----+-------+----------+---------+-------+--------------+--------+------------------+
| 106|   orcl| DBUPGRADE|EXECUTING|RUNNING|22/02/16 14:41|15:45:33|0%Upgraded ORCLPDB|
| 107| noncdb|POSTFIXUPS|EXECUTING|RUNNING|22/02/16 14:42|15:46:17|     Remaining 4/4|
+----+-------+----------+---------+-------+--------------+--------+------------------+
Total jobs 2

upg> Job 107 completed
upg> lsj
+----+-------+----------+---------+--------+--------------+--------+-----------------+
|Job#|DB_NAME|     STAGE|OPERATION|  STATUS|    START_TIME| UPDATED|          MESSAGE|
+----+-------+----------+---------+--------+--------------+--------+-----------------+
| 106|   orcl|POSTFIXUPS|EXECUTING| RUNNING|22/02/16 14:41|16:47:19|   Remaining 3/12|
| 107| noncdb|SYSUPDATES|  STOPPED|FINISHED|22/02/16 14:42|16:10:13|Completed job 107|
+----+-------+----------+---------+--------+--------------+--------+-----------------+
Total jobs 2

upg> lsj
+----+-------+----------+---------+--------+--------------+--------+-----------------+
|Job#|DB_NAME|     STAGE|OPERATION|  STATUS|    START_TIME| UPDATED|          MESSAGE|
+----+-------+----------+---------+--------+--------------+--------+-----------------+
| 106|   orcl|SYSUPDATES|PREPARING| RUNNING|22/02/16 14:41|16:54:08|                 |
| 107| noncdb|SYSUPDATES|  STOPPED|FINISHED|22/02/16 14:42|16:10:13|Completed job 107|
+----+-------+----------+---------+--------+--------------+--------+-----------------+
Total jobs 2

upg> Job 106 completed
------------------- Final Summary --------------------
Number of databases [ 2 ]

Jobs finished [2]
Jobs failed [0]
Jobs pending [0]

---- Drop GRP at your convenience once you consider it is no longer needed ----
Drop GRP from noncdb: drop restore point AUTOUPGRADE_9212_NONCDB122010
Drop GRP from orcl: drop restore point AUTOUPGRADE_9212_ORCL122010

Please check the summary report at:
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.html
/u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto/status/status.log
```
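A guaranteed restore point keeps its flashback logs in the FRA until it is dropped, so it is worth keeping the exact statements from the summary. A minimal bash sketch that extracts them, assuming the "Drop GRP from <sid>: drop restore point <name>" format shown above; grp_drop_statements is a hypothetical helper.

```bash
#!/usr/bin/env bash
# grp_drop_statements (hypothetical helper): reads AutoUpgrade deploy output
# on stdin and prints "<sid> <SQL statement>;" for each guaranteed restore
# point listed under "Drop GRP at your convenience".
grp_drop_statements() {
  sed -n 's/^Drop GRP from \([^:]*\): \(drop restore point [A-Za-z0-9_$#]*\).*/\1 \2;/p'
}
```

Once you no longer need the fallback, run each printed statement as SYSDBA in the matching database.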

While the deploy job was running, we served the state page from a second session:

```
[oracle@test-machine03 fra]$ cd /u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto
[oracle@test-machine03 auto]$ ls -l
total 1372
-rwx------. 1 oracle oinstall    672 Feb 16 14:41 autoupgrade_err.log
-rwx------. 1 oracle oinstall      0 Feb 16 14:41 autoupgrade_err.log.lck
-rwx------. 1 oracle oinstall 927188 Feb 16 14:46 autoupgrade.log
-rwx------. 1 oracle oinstall      0 Feb 16 14:41 autoupgrade.log.lck
-rwx------. 1 oracle oinstall   3752 Feb 16 14:41 autoupgrade_user.log
-rwx------. 1 oracle oinstall      0 Feb 16 14:41 autoupgrade_user.log.lck
drwx------. 2 oracle oinstall   4096 Feb 16 14:41 config_files
drwx------. 2 oracle oinstall     18 Feb 16 14:41 lock
drwx------. 2 oracle oinstall     23 Feb 16 14:41 sql
-rwx------. 1 oracle oinstall  11932 Feb 16 14:46 state.html
drwx------. 2 oracle oinstall   4096 Feb 16 14:44 status
[oracle@test-machine03 auto]$ python -m SimpleHTTPServer 8080
Serving HTTP on 0.0.0.0 port 8080 ...
192.168.114.169 - - [16/Feb/2022 14:47:22] "GET /state.html HTTP/1.1" 200 -
192.168.114.169 - - [16/Feb/2022 14:47:23] code 404, message File not found
192.168.114.169 - - [16/Feb/2022 14:47:23] "GET /favicon.ico HTTP/1.1" 404 -
```
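SimpleHTTPServer exists only in Python 2; on servers that have only Python 3, the equivalent module is http.server. A minimal bash sketch that picks whichever is available; http_server_cmd is a hypothetical helper.

```bash
#!/usr/bin/env bash
# http_server_cmd (hypothetical helper): prints a command that serves the
# current directory over HTTP on port $1, preferring Python 3's http.server
# and falling back to the Python 2 SimpleHTTPServer module used above.
http_server_cmd() {
  if command -v python3 >/dev/null 2>&1; then
    printf 'python3 -m http.server %s\n' "$1"
  else
    printf 'python -m SimpleHTTPServer %s\n' "$1"
  fi
}
```

Usage: cd /u01/19c_dbupgrade/autoupgrade/cfgtoollogs/upgrade/auto && $(http_server_cmd 8080). Either module serves every file under that directory to anyone who can reach the port, so stop it with Ctrl+C once the upgrade is finished.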

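Step 8. Validate DB upgrade to 19.14: Confirm that /etc/oratab now points both databases to the 19c home, then connect to the upgraded database and verify that the 19.14 RU is recorded in CDB_REGISTRY_SQLPATCH and that all components in CDB_REGISTRY are VALID.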

```
[oracle@test-machine03 auto]$ cat /etc/oratab
#
orcl:/u01/app/oracle/product/19.0/db_1:N
noncdb:/u01/app/oracle/product/19.0/db_1:N # line added by Agent
+ASM:/u01/app/19.0/grid:N # line added by Agent
[oracle@test-machine03 auto]$ cd /u01/app/oracle/product/19.0/db_1/dbs
[oracle@test-machine03 dbs]$ ls -l
total 28
-rw-rw----. 1 oracle asmadmin 1544 Feb 16 16:10 hc_noncdb.dat
-rw-rw----. 1 oracle asmadmin 1544 Feb 16 16:54 hc_orcl.dat
-rw-r--r--. 1 oracle oinstall 3079 May 14  2015 init.ora
-rw-r-----. 1 oracle asmadmin   24 Feb 16 14:48 lkNONCDB
-rw-r-----. 1 oracle asmadmin   24 Feb 16 14:48 lkORCL
-rw-r-----. 1 oracle oinstall 3584 Feb 16 16:10 orapwnoncdb
-rw-r-----. 1 oracle oinstall 3584 Feb 16 16:54 orapworcl
```

[oracle@test-machine03 auto]$

[oracle@test-machine03 auto]$ sqlplus sys as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Feb 16 17:08:52 2022

Version 19.14.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Enter password:

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.14.0.0.0

SQL>

SQL> show con_name

CON_NAME

------------------------------

CDB$ROOT

SQL>

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 ORCLPDB READ WRITE NO

SQL>

SET LINESIZE 500

SET PAGESIZE 1000

SET SERVEROUT ON

SET LONG 2000000

COLUMN action_time FORMAT A12

COLUMN action FORMAT A10

COLUMN patch_type FORMAT A10

COLUMN description FORMAT A32

COLUMN status FORMAT A10

COLUMN version FORMAT A10

select CON_ID,

TO_CHAR(action_time, 'YYYY-MM-DD') AS action_time,

PATCH_ID,

ACTION,

STATUS,

SOURCE_VERSION,

TARGET_VERSION,

DESCRIPTION

from CDB_REGISTRY_SQLPATCH

order by CON_ID, action_time, patch_id;

CON_ID ACTION_TIME PATCH_ID ACTION STATUS SOURCE_VERSION TARGET_VERSION DESCRIPTION

---------- ------------ ---------- ---------- ---------- --------------- --------------- --------------------------------

1 2022-02-16 33515361 APPLY SUCCESS 19.1.0.0.0 19.14.0.0.0 Database Release Update : 19.14.0.0.220118 (33515361)

3 2022-02-16 33515361 APPLY SUCCESS 19.1.0.0.0 19.14.0.0.0 Database Release Update : 19.14.0.0.220118 (33515361)
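If you spool the output of the SQL patch query to a file, you can script this check. The sketch below counts the rows in which the given patch ID is followed by APPLY SUCCESS. It handles both the CDB_REGISTRY_SQLPATCH output (with CON_ID) and the DBA_REGISTRY_SQLPATCH output (without it). The function name and the spool-file approach are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: count "<patch_id> APPLY SUCCESS" rows in spooled
# CDB_REGISTRY_SQLPATCH / DBA_REGISTRY_SQLPATCH query output.
ru_apply_success_count() {
  local spool_file="$1" patch_id="$2"
  awk -v id="$patch_id" '
    {
      # The patch ID may sit in column 1 (non-CDB) or column 2 (CDB, after CON_ID)
      for (i = 1; i <= NF - 2; i++)
        if ($i == id && $(i+1) == "APPLY" && $(i+2) == "SUCCESS") { n++; break }
    }
    END { print n + 0 }
  ' "$spool_file"
}
```

For the CDB above, the count for 33515361 would be 2 (CON_ID 1 and 3). A count of 0 means the RU is not recorded as successfully applied in that database.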

set lines 300

set pages 300

COL comp_id for a15

COL status for a10

COL version for a15

COL comp_name for a40

SELECT CON_ID,SUBSTR(comp_id,1,15) comp_id, status, SUBSTR(version,1,10) version, SUBSTR(comp_name,1,40) comp_name FROM cdb_registry;

CON_ID COMP_ID STATUS VERSION COMP_NAME

---------- --------------- ---------- --------------- ----------------------------------------

3 CATALOG VALID 19.0.0.0.0 Oracle Database Catalog Views

3 CATPROC VALID 19.0.0.0.0 Oracle Database Packages and Types

3 RAC OPTION OFF 19.0.0.0.0 Oracle Real Application Clusters

3 XDB VALID 19.0.0.0.0 Oracle XML Database

3 OWM VALID 19.0.0.0.0 Oracle Workspace Manager

1 CATALOG VALID 19.0.0.0.0 Oracle Database Catalog Views

1 CATPROC VALID 19.0.0.0.0 Oracle Database Packages and Types

1 JAVAVM VALID 19.0.0.0.0 JServer JAVA Virtual Machine

1 XML VALID 19.0.0.0.0 Oracle XDK

1 CATJAVA VALID 19.0.0.0.0 Oracle Database Java Packages

1 APS VALID 19.0.0.0.0 OLAP Analytic Workspace

1 RAC OPTION OFF 19.0.0.0.0 Oracle Real Application Clusters

1 XDB VALID 19.0.0.0.0 Oracle XML Database

1 OWM VALID 19.0.0.0.0 Oracle Workspace Manager

1 CONTEXT VALID 19.0.0.0.0 Oracle Text

1 ORDIM VALID 19.0.0.0.0 Oracle Multimedia

1 SDO VALID 19.0.0.0.0 Spatial

1 XOQ VALID 19.0.0.0.0 Oracle OLAP API

1 APEX VALID 5.0.4.00.1 Oracle Application Express

19 rows selected.
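Checking 19 rows by eye is error-prone. The sketch below prints only the component rows whose STATUS is neither VALID nor OPTION OFF. It assumes that the query output above was spooled to a file, and it locates the VERSION column by its dotted format. The function name registry_problems is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: print component rows whose STATUS is not VALID or OPTION OFF.
# Works on spooled cdb_registry (with CON_ID) or dba_registry output.
registry_problems() {
  awk '
    {
      # Find the VERSION column, e.g. 19.0.0.0.0 or 5.0.4.00.1
      v = 0
      for (i = 2; i <= NF; i++) if ($i ~ /^[0-9]+\.[0-9]+\.[0-9.]+$/) { v = i; break }
      if (v == 0) next                                   # header, separator, feedback lines
      if ($(v-1) == "VALID") next                        # healthy component
      if ($(v-1) == "OFF" && $(v-2) == "OPTION") next    # RAC on a standalone server
      print
    }
  ' "$1"
}
```

Empty output means that every component is either VALID or, like RAC on this standalone server, OPTION OFF.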

SQL>

SQL> set lines 300

SQL> select name, to_char(scn), time from v$restore_point;

NAME TO_CHAR(SCN) TIME

-------------------------------------------------------------------------------------------------------------------------------- ---------------------------------------- ---------------------------------------------------------------------------

AUTOUPGRADE_9212_ORCL122010 2625542 16-FEB-22 02.41.21.000000000 PM

SQL> drop restore point AUTOUPGRADE_9212_ORCL122010;

Restore point dropped.

SQL>
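Before dropping anything, confirm that you will not need to roll back to 12.2. Once the restore point is gone, you can no longer flash back to the pre-upgrade state. When several databases were upgraded, a sketch like the one below turns spooled v$restore_point output into DROP RESTORE POINT statements that you can review first. It assumes that the AutoUpgrade restore point names start with AUTOUPGRADE_, as they do in this run. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: turn spooled "select name, to_char(scn), time from v$restore_point"
# output into DROP statements for review. Assumes AutoUpgrade restore point
# names start with AUTOUPGRADE_ (as in this run); the function name is hypothetical.
autoupgrade_rp_drop_sql() {
  awk '$1 ~ /^AUTOUPGRADE_/ { print "DROP RESTORE POINT " $1 ";" }' "$1"
}
```

Restore points with other names, such as ones created manually, are left out on purpose. Review the generated statements before running them in SQL*Plus.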

############################################ NonCDB DB #########################################

SET LINESIZE 500

SET PAGESIZE 1000

SET SERVEROUT ON

SET LONG 2000000

COLUMN action_time FORMAT A12

COLUMN action FORMAT A10

COLUMN patch_type FORMAT A10

COLUMN description FORMAT A32

COLUMN status FORMAT A10

COLUMN version FORMAT A10

select

TO_CHAR(action_time, 'YYYY-MM-DD') AS action_time,

PATCH_ID,

ACTION,

STATUS,

SOURCE_VERSION,

TARGET_VERSION,

DESCRIPTION

from DBA_REGISTRY_SQLPATCH

order by action_time, patch_id;

ACTION_TIME PATCH_ID ACTION STATUS SOURCE_VERSION TARGET_VERSION DESCRIPTION

------------ ---------- ---------- ---------- --------------- --------------- --------------------------------

2022-02-16 33515361 APPLY SUCCESS 19.1.0.0.0 19.14.0.0.0 Database Release Update : 19.14.0.0.220118 (33515361)

set lines 300

set pages 300

COL comp_id for a15

COL status for a10

COL version for a15

COL comp_name for a40

SELECT SUBSTR(comp_id,1,15) comp_id, status, SUBSTR(version,1,10) version, SUBSTR(comp_name,1,40) comp_name FROM dba_registry;

COMP_ID STATUS VERSION COMP_NAME

--------------- ---------- --------------- ----------------------------------------

CATALOG VALID 19.0.0.0.0 Oracle Database Catalog Views

CATPROC VALID 19.0.0.0.0 Oracle Database Packages and Types

JAVAVM VALID 19.0.0.0.0 JServer JAVA Virtual Machine

XML VALID 19.0.0.0.0 Oracle XDK

CATJAVA VALID 19.0.0.0.0 Oracle Database Java Packages

APS VALID 19.0.0.0.0 OLAP Analytic Workspace

RAC OPTION OFF 19.0.0.0.0 Oracle Real Application Clusters

XDB VALID 19.0.0.0.0 Oracle XML Database

OWM VALID 19.0.0.0.0 Oracle Workspace Manager

CONTEXT VALID 19.0.0.0.0 Oracle Text

ORDIM VALID 19.0.0.0.0 Oracle Multimedia

SDO VALID 19.0.0.0.0 Spatial

XOQ VALID 19.0.0.0.0 Oracle OLAP API

APEX VALID 5.0.4.00.1 Oracle Application Express

SQL>

SQL> select name, to_char(scn), time from v$restore_point;

NAME TO_CHAR(SCN) TIME

-------------------------------------------------------------------------------------------------------------------------------- ---------------------------------------- ---------------------------------------------------------------------------

AUTOUPGRADE_9212_NONCDB122010 1868179 16-FEB-22 02.42.42.000000000 PM

SQL> drop restore point AUTOUPGRADE_9212_NONCDB122010;

Restore point dropped.

SQL>

SQL>
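To repeat the component check without an interactive SQL*Plus session (for example, for each database in /etc/oratab), you can pipe the query into sqlplus -s. Below is a minimal sketch. It assumes that OS authentication as SYSDBA works for the oracle user. The helper names registry_check_sql and run_registry_check are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: run the component check non-interactively.
# Assumptions: OS authentication ("/ as sysdba") works for the oracle user;
# registry_check_sql and run_registry_check are hypothetical helper names.

# Print the SQL*Plus script; use cdb_registry from CDB$ROOT to include CON_ID.
registry_check_sql() {
  local view="${1:-dba_registry}"
  local cols="substr(comp_id,1,15) comp_id, status, substr(version,1,10) version, substr(comp_name,1,40) comp_name"
  if [ "$view" = "cdb_registry" ]; then
    cols="con_id, ${cols}"
  fi
  cat <<EOF
set lines 300 pages 300 feedback off
col comp_id for a15
col status for a10
col version for a15
col comp_name for a40
select ${cols}
  from ${view};
exit
EOF
}

# Feed the script to sqlplus -s for one instance running from the given home.
run_registry_check() {
  local sid="$1" home="$2" view="${3:-dba_registry}"
  registry_check_sql "$view" |
    ORACLE_SID="$sid" ORACLE_HOME="$home" \
    LD_LIBRARY_PATH="${home}/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" \
    "${home}/bin/sqlplus" -s / as sysdba
}
```

For example, run_registry_check noncdb /u01/app/oracle/product/19.0/db_1 checks the non-CDB, and run_registry_check orcl /u01/app/oracle/product/19.0/db_1 cdb_registry checks every container of the CDB from the root.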

This document is for learning purposes only. Always validate these steps in a lab environment before applying them in a live (production) environment.

We hope you like this article!

